
Hachyderm Blog

- Announcements

- Posts

- Hachyderm and Nivenly

- The Israel-Palestine War

- A Minute from the Moderators

- A Minute from the Moderators

- A Minute from the Moderators

- Stepping Down From Hachyderm

- A Minute from the Moderators

- A Minute from the Moderators

- Decaf Ko-Fi: Launching GitHub Sponsors et al

- Growth and Sustainability

- Leaving the Basement

- Incidents

Announcements

Threads Update

What is Threads?

Threads is an online social media and social networking service operated by Meta Platforms. The app offers users the ability to post and share text, images, and videos, as well as interact with other users’ posts through replies, reposts, and likes. Closely linked to Meta platform Instagram and additionally requiring users to both have an Instagram account and use Threads under the same Instagram handle, the functionality of Threads is similar to X (formerly known as Twitter) and Mastodon.

What is the status of their ActivityPub implementation?

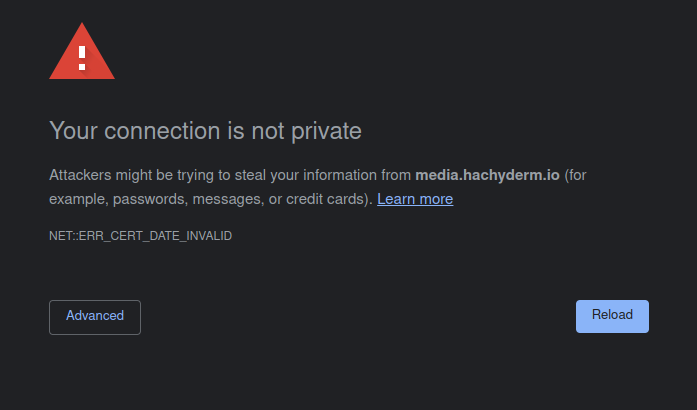

As of December 13, 2023, Threads has begun to test their implementation of ActivityPub. As of December 22, 2023, only seven users from Threads are federating with Hachyderm’s instance. For all other users on Threads, federation is failing due to certificate errors on Threads’ side. We understand that they are working to resolve those certificate issues with assistance from the Mastodon core team.

Based on the available Terms of Use and Supplemental Privacy Policy provided by Meta, they are not selling any of the data they have. This is not official legal or privacy advice for individual users, and we recommend reviewing the linked documents and drawing your own conclusions.

With regard to this section of the privacy policy:

Information From Third Party Services and Users: We collect information about the Third Party Services and Third Party Users who interact with Threads. If you interact with Threads through a Third Party Service (such as by following Threads users, interacting with Threads content, or by allowing Threads users to follow you or interact with your content), we collect information about your third-party account and profile (such as your username, profile picture, and the name and IP address of the Third Party Service on which you are registered), your content (such as when you allow Threads users to follow, like, reshare, or have mentions in your posts), and your interactions (such as when you follow, like, reshare, or have mentions in Threads posts).

It’s important to remember a few things:

- Mastodon and ActivityPub at their core use a form of caching in order to make the process as seamless as possible. For example, when you create a verified link on your profile, every instance that your profile opens on does its own check of the links and saves the validation on that third party server. This helps prevent malicious actors from falsifying verified links that would then trickle out to other instances.

- We don’t transmit user IPs to any third party instances as part of your interaction. If Meta is able to collect your IP, it would be through a direct interaction with a post on their server or CDN.
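To make the verified-link example concrete: Mastodon's link verification relies on the linked page containing a link back to the profile that is marked `rel="me"`. The following is a minimal Python sketch of that kind of check, run over an HTML string that has already been fetched. The names `RelMeParser` and `links_back_to` and the sample URLs are illustrative assumptions, not Mastodon's actual code.

```python
from html.parser import HTMLParser


class RelMeParser(HTMLParser):
    """Collects href values of <a> and <link> tags whose rel includes "me"."""

    def __init__(self):
        super().__init__()
        self.rel_me_links = []

    def handle_starttag(self, tag, attrs):
        if tag not in ("a", "link"):
            return
        attr_map = dict(attrs)
        # Attribute values can be None (e.g. a bare `rel`), so fall back to "".
        rel_values = (attr_map.get("rel") or "").lower().split()
        href = attr_map.get("href")
        if "me" in rel_values and href:
            self.rel_me_links.append(href)


def links_back_to(page_html: str, profile_url: str) -> bool:
    """Return True if the page has a rel="me" link pointing at profile_url."""
    parser = RelMeParser()
    parser.feed(page_html)
    target = profile_url.rstrip("/")
    return any(link.rstrip("/") == target for link in parser.rel_me_links)


# Hypothetical page content and profile URL, for illustration only.
sample_page = '<a rel="me" href="https://hachyderm.io/@example">Mastodon</a>'
print(links_back_to(sample_page, "https://hachyderm.io/@example"))
```

Because each instance would run a check like this itself and store its own result, a remote server cannot simply claim that a link is verified.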

How does this impact Hachyderm?

At this point, Threads’ tests of ActivityPub do not impact us directly. Based on the available information, they haven’t breached any rules of this instance, they aren’t selling any of the data as discussed above, and the user pool is so limited that even if they did, our team would be able to moderate quickly and decisively. In addition, any users who want to block Threads at this time can follow the instructions in the next section to pre-emptively block Threads at their account level.

As a result, we will continue to follow our standard of monitoring each instance on a case-by-case basis to see how the situation evolves, and if a time comes that we see federating with Threads as a risk to the safety of our users and community, we will defederate at that time.

Indirectly, we know that admins of other instances have expressed that they will defederate with any instances that will continue to federate with Threads. While we hope that the information in this blog post has helped people understand the currently limited risk of continuing to federate with Threads, we also know that other instances have a much more limited set of resources and may need to preemptively defederate with the Threads instance. The beauty of the Fediverse is that each instance has that right and ability.

How to block Threads

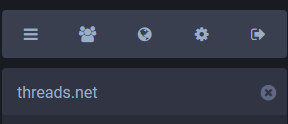

- Search for “threads.net” in the search box

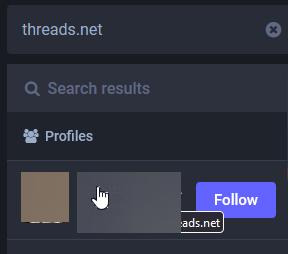

- Select a user from the results

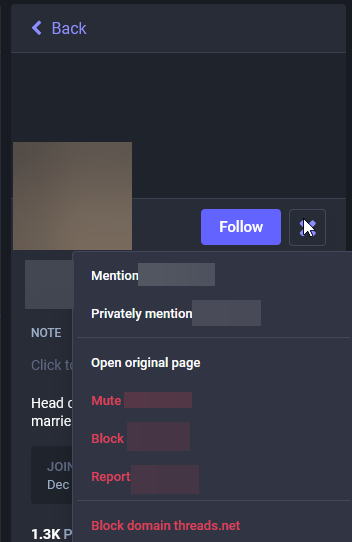

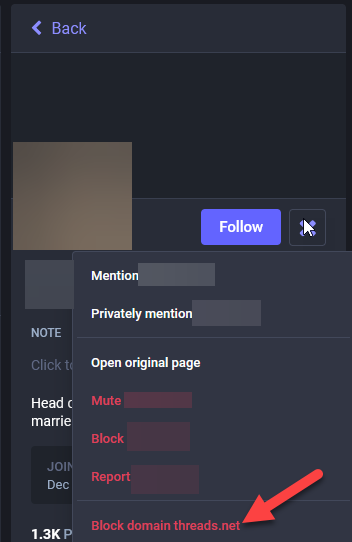

- Open the menu from the profile

- Select “Block domain threads.net”

- Read the prompt and select your desired action

To understand the ramifications of blocking an instance, please review the Mastodon documentation for details on what happens.
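For readers who prefer the API to the web interface, Mastodon's REST API exposes the same account-level domain block as `POST /api/v1/domain_blocks` with a `domain` parameter. The Python sketch below only builds the request and does not send it. The helper name `build_domain_block_request` and the access token are placeholders, and the sketch assumes a user token with the `write:blocks` scope.

```python
import urllib.parse
import urllib.request


def build_domain_block_request(instance_url: str, access_token: str, domain: str) -> urllib.request.Request:
    """Build (but do not send) a request that blocks `domain` for the token's account."""
    body = urllib.parse.urlencode({"domain": domain}).encode("utf-8")
    return urllib.request.Request(
        url=f"{instance_url.rstrip('/')}/api/v1/domain_blocks",
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {access_token}"},
    )


# Placeholder token for illustration only; a real token is an assumption
# of this sketch and must belong to your own account.
request = build_domain_block_request("https://hachyderm.io", "YOUR_ACCESS_TOKEN", "threads.net")
print(request.get_method(), request.full_url)
# To actually apply the block: urllib.request.urlopen(request)
```

The effect is the same as the menu option above, so the ramifications described in the Mastodon documentation apply equally.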

Next Steps

As Threads continues to implement their integration with ActivityPub and the Fediverse at large, we will watch how those users integrate with our community and how their service interacts with our servers. If you would like to learn more about our criteria for how Hachyderm handles federating with other instances, please review our A Minute from the Moderators - July Edition where we list out our criteria.

Crypto Spam Attacks on Fediverse

The Situation

Starting around 8 May 2023, we began to receive reports that Mastodon Social was being inundated with crypto spam.

Initially, it appeared that only Mastodon Social, and then Mastodon World, were impacted. In each case we Limited the instance and made a site-wide announcement. As the issue progressed, it became clear that more instances were being targeted for this same style of crypto spam. As a result, we have decided to change our communication strategy to utilize this blog post as a source for what’s happening and who is being impacted, rather than relying on increasingly frequent site-wide announcements.

As it stands, we have seen waves of spam from Mastodon Social, Mastodon World, and now TechHub Social. During these waves we usually receive 100-200 reports within a few hours. (By contrast, we usually receive ~20 reports per week.)

What this means for Hachydermians (and Mastodon users in general)

Spam attacks seem to make use of open federation to find accounts to target with follow/unfollow abuse, DMs, comments, and other invasive behaviors. In general, Limiting a server is sufficient for mitigating the impacts of these behaviors. Limiting means that Hachydermians’ posts no longer show up in the Federated feeds of impacted instances, which means that bots can no longer use the Federated feed as a vector for malicious behavior. While this is a good thing and means that these bots will no longer be able to spam Hachydermians, the Limit works both ways. This means:

- The posts for Limited instances will no longer show up on the Federated feed

- You will receive approval requests for all accounts on Limited instances

- User profiles will appear to have been “Hidden by instance moderators”

The UI messages for the latter two can be difficult to interpret. Essentially, when a user from a Limited instance asks to follow you, or when you view their profile page, you will see the same message as you would if we had Limited only that specific user.

For users on the impacted instances, these messages should not be taken to mean that the individual user has engaged in any sort of malicious activity. In general, when we see individual-level malicious activity, we suspend federation with (block) the individual user rather than Limit them. Instead, these messages are only a consequence of us needing to Limit the servers while they are doing their best to manage the spam attacks they are undergoing.

The impacted instances

We are maintaining the list of instances that we are Limiting as a result of the current crypto spam attack here. Note that this is not all instances we currently have Limited for any reason, only the ones that are experiencing this specific scenario. We will continue to announce when new instances are added to this list via our Hachyderm account and link back to this blog post. Instances that are no longer impacted will be un-Limited and removed from the list below. (When the list is empty, that means that all instances have been un-Limited.)

Updates

Update 25 May 2023 - we’ve been crypto spam free from Mastodon Social and Mastodon World, so we’ve gone ahead and un-Limited those instances.

Update 2 Jun 2023 - we’ve been crypto spam free from TechHub Social, so we’ve gone ahead and un-Limited that instance! That’s the last one, so this incident is resolved.

Updating Domain Blocks

Today we are removing x0f.org from our list of suspended instances.

Hachyderm will begin federating with x0f.org immediately.

Reason for suspending

We believe the original suspension was related to moderation actions taken early in 2022. Those actions took place before Hachyderm had a process or policy in place to communicate and provide reasoning for a suspension.

Reason for removing suspension

According to our records, we have no reports on file that warrant a suspension of this domain. The domain was brought to our attention as likely flagged by mistake. After review we have determined that there is no reason to suspend this domain.

A Note On Suspensions

It is important to us to protect Hachyderm’s community and our users. We may not always get this right, and we will often make mistakes. Thank you to our dedicated users for surfacing this domain (and the 13 others) that we have removed from our suspension list. Thank you to the broader fediverse for being patient with us as we continue to iterate on our processes in this unprecedented space.

Opening Hachyderm Registrations

Yesterday I made the decision to temporarily close user registrations for the main site: hachyderm.io.

Today I am making the decision to re-open user registrations for Hachyderm.

Reason for Closing

The primary reason for closing user registrations yesterday was related to the DDoS Security Threat that occurred the morning after our Leaving the Basement migration.

The primary vector for leveraging Hachyderm infrastructure for perceived malicious use was the creation of spam/bot accounts on our system. Out of extreme precaution, we closed signups for roughly 24 hours.

Reason for Opening

Today, Hachyderm does not have a targeted growth or capacity number in mind.

However, what we have observed is that user adoption has dropped substantially compared to November. I believe that we will see substantially less adoption in December than we did in November.

We will be watching closely to validate this hypothesis, and will leverage this announcement page as an official source of truth if our posture changes.

For now we have addressed some more detail on growth, registrations, and sustainability in our Growth and Sustainability blog.

Posts

Hachyderm and Nivenly

Hey Hachyderm!

We’re continuing to post on more important topics as previously promised. For today’s topic, we wanted to cover something that we know a lot of people have had questions about: the relationship between Hachyderm and Nivenly. Our discussion will focus on the relationship structure, our commitment to Nivenly, and our assurance to the community.

Structure of Nivenly and Hachyderm

The Nivenly Foundation is founded on the principle that project maintainers should share in their projects’ success. This foundation brings sustainable governance to open source projects and communities around the globe and supports the maintainers’ independent oversight of their projects. In addition, Nivenly is a decentralized, democratically-governed non-profit technical organization, that focuses on building an equitable future for technology communities.

In 2023, Hachyderm was transitioned from individual private ownership to stewardship under Nivenly. This transition helped to give Hachyderm the financial structure needed to get to a stable point. This included access to a non-profit organization that can help facilitate donations and help us handle the legal requirements that come with scaling.

Nivenly’s relationship to Hachyderm is comparable to the Cloud Native Computing Foundation (CNCF) stewardship of Kubernetes. The parallels in our relationship do not stop at structure, but extend to our mission as well. Having accepted Kubernetes as its first project in 2015, CNCF provides a neutral home for Kubernetes, fostering its growth and ensuring its development through a community-driven, collaborative approach. This relationship has facilitated Kubernetes’ evolution into a leading platform for container orchestration, widely adopted in the industry for deploying and managing applications in cloud environments. Nivenly aims to enable the same growth in safe spaces through Hachyderm.

Our commitments to Nivenly

Our commitment to Nivenly is plain and simple: Ensure that hackers, professionals, and enthusiasts that are passionate about life, respect, and digital freedom are provided a safe space where they can find peace and balance.

While this is a tall order, everyone at Hachyderm believes in this vision and is committed to delivering on it.

Our assurance to the Community

Over the months, we have made a number of posts discussing the moderation process and the team as a whole. You can find more details from last May’s Moderator Minutes https://community.hachyderm.io/blog/2023/05/08/a-minute-from-the-moderators/. We won’t be rehashing the details of those posts here. Instead, we want to share with the community how our relationship with Nivenly impacts the decisions that we make as a moderation team.

We look to Nivenly for reassurance that we are acting in a way that aligns with the stated commitment. In addition, Nivenly ensures that we have the support necessary to deliver on those commitments. However, Nivenly doesn’t otherwise participate in the day to day of Hachyderm’s operations. We firmly believe that this distinction is of utmost significance. Because Hachyderm is able to operate independently, we can ensure that the decisions we make do their best to represent the community as a whole.

Disclaimer: There are people who volunteer at both Nivenly and Hachyderm, but their roles are separated by the tasks that they are completing.

The Israel-Palestine War

Hey Hachyderm,

Before we get started, we wanted to acknowledge that it’s been a while since we’ve written a blog post. Thank you for your patience! We’ve made a content plan and you should expect to see more activity through the blog starting this month covering a range of topics.

Today we wanted to discuss the ongoing war between Israel and Palestine. There are a lot of emotions tied to the conversation because it hits home for many of us.

Where We Stand

Firstly, we want to make it clear that we’re heartbroken by the violence and the loss of innocent lives, and we stand firmly against war. The devastation and suffering caused by this war are tragedies, and we believe in the importance of peace and understanding to resolve disputes.

Speaking Up, With Respect

We uphold the right of our community members to express their views and critique political structures. However, we feel it’s important to remind our community that the guidelines don’t allow for blanket statements or sweeping claims about religions or people of a specific faith. It’s important to separate the actions of a government from the beliefs of individuals.

When it comes to the Israel-Palestine war, we know religion is a big part of society for both sides. However, let’s not forget that religions aren’t defined solely by these governments, even if their leaders are prominent figures with loud voices. As moderators, we support Hachydermians when they need to vent or criticize situations and decisions. That said, sweeping generalizations and intolerance will not be supported.

As we continue to discuss this topic, let’s strive to promote understanding, empathy, and respect. Remember, behind every post and comment there’s a real person that we could learn from and grow with through respectful and open-minded conversations.

We recommend reviewing the Give Yourself permission section of our Mental Health and Boundaries document for help and ideas to support yourself when having conversations involving long term crises: https://community.hachyderm.io/docs/hachyderm/mental-health/#give-yourself-permission

Warmly,

Your Hachyderm Moderators

A Minute from the Moderators

We hope everyone had a wonderful and safe new year celebration!

In today’s moderator minutes we will be focused on the continuing conversations around Threads and how Hachyderm moderation is continuing to expand our collaboration with our community.

What’s going on with Threads?

Status

As of December 13, 2023, Threads has begun to test their implementation of ActivityPub. As of January 5, 2024, nine users from Threads are federating with Hachyderm’s instance. This is an increase of two users from our prior report.

When reviewing the available Terms of Use and Supplemental Privacy Policy provided by Meta, we don’t see any significant changes. This is not official legal or privacy advice for individual users, and we recommend reviewing the linked documents and drawing your own conclusions.

It’s important to remember a few things:

- Mastodon and ActivityPub at their core use a form of caching in order to make the process as seamless as possible. For example, when you create a verified link on your profile, every instance that your profile opens on does its own check of the links and saves the validation on that third party server. This helps prevent malicious actors from falsifying verified links that would then trickle out to other instances.

- We don’t transmit user IPs to any third party instances as part of your interaction. If Meta is able to collect your IP, it would be through a direct interaction with a post on their server or CDN.

For more details, review our original update about Threads.

Limiting Threads

Based on user concern, we are shifting to a Limited status with Threads.

We have included the definition of a limit below, which comes from the Mastodon documentation.

A limited account is hidden from all other users on that instance, except for its followers. All of the content is still there, and it can still be found via search, mentions, and following, but the content is invisible publicly.

What this means:

- You will still be able to follow people from Threads once Threads federates those users

- You will not see Threads posts on your timelines unless you follow the user

- You will be required to approve all followers from Threads

- You will be required to accept a prompt before viewing a Threads user’s account unless you are following them

Next Steps

As Threads continues to implement their integration with ActivityPub and the Fediverse at large, we will watch how those users integrate with our community and how their service interacts with our servers. If you would like to learn more about our criteria for how Hachyderm handles federating with other instances, please review our A Minute from the Moderators - July Edition where we list out our criteria.

Very, very quick Q&A on Threads

Q: What happens if there is a problematic account on Threads that is federating?

A: They will be blocked by our existing moderation policies.

Q: What happens if there are multiple problematic accounts on Threads that are federating?

A: Depending on the volume, we will either block all those individual accounts or Threads as a whole.

Q: I am concerned about anti-trans and other hate content that may come from Threads.

A: We are too! And we continue to monitor the instance for any of these behaviors. If and when that type of content appears, we will block it the way we do with any other instance.

Q: Why are you giving Threads “a chance”? We know about the parent company.

A: We’re not giving Threads “a chance” so much as meeting the current situation where it’s at with plans to continue to adapt as the situation changes. Since there are 9 users, we’re treating this instance like a 9 user instance. In cases with instances that are ~50 users or less, unless the instance is cohesive (e.g. examplenazi.com where they all espouse that ideology), we moderate individual accounts. This means that regardless of the status of Threads as a whole or the number of federating accounts, accounts like Libs of Tik Tok would never federate with Hachyderm for being in violation of our federating policy.

Community Collaboration

Community Votes

In the approximately 15 months since Hachyderm scaled, we have limited or suspended federation with an average of 19 instances per month, for a total of 285 instances. These instances in large part have been banned in order to pre-emptively protect our users. In almost every case, we know that these instances go against everything we stand for as a group. However, in a few of those cases, it’s more nuanced to determine if and when we should block an instance and how we should communicate those changes.

For example, if we blocked exampleillegal.com for illicit materials, we wouldn’t announce the change, and we expect that none of our users would take issue with us blocking that material.

In contrast, on Jun 30, 2023, 22:12 UTC, we made the following announcement:

👋 Hello Hachydermia!

Due to the number of Twitter/Bird Site relay servers that have gone offline after the pricing update to Twitter’s API, we have recently started cleaning up servers that federate with our environment but appear to be offline.

If we removed a server that was functional and it has impacted your experience, please open a ticket on our GitHub issue page and we can evaluate reestablishing access.

Since making that decision, we have had a few requests from users to re-evaluate 2 of the instances that we blocked. The other instances remain blocked, and the search box is clear of those invalid instances. While this change was made for convenience and not protection, it had a large scale impact.

There are some cases that are more complicated and we would like to gather feedback from our community to determine which route best aligns with the entire community. We are releasing a new process to collect that information.

- Moderation team determines that we need a survey from the community

- Moderation team develops a multiple choice set of options for how to respond

- Moderation team will make a post informing the community about a survey

- A new announcement will be published

- Users will be able to respond to the announcement for 72 hours

- At the end of the 72 hours, the moderation team will collect the results from the community and close the poll.

This method isn’t perfect, but we are excited to continue to work on a method to collaborate with our community in convenient and meaningful ways.

Trusted reporters

Starting this month, the moderation team will be reaching out to select users to ask them to join our Trusted Reporters group. These users will be selected based upon the quality of the reports that they have submitted that have resulted in actions by the moderation team. This group of individuals will be given an opportunity to learn more about the moderation process. The goal is to ensure that community members are able to deliver the most meaningful reports to our moderation team. In exchange, these trusted reporters will have a more direct line of communication to a few members within the moderation team that can help assist in the event of a time critical need for moderator support.

How to become a trusted reporter

It’s important to note that Trusted Reporters will not have access to the moderation interface, admin interface, infrastructure, or tooling. The primary goal of the Trusted Reporters program is to ensure the delivery of high quality reports and faster responses for time critical issues. As a result, the moderation team will select trusted reporters by reviewing users that submit quality reports that contain:

- What - Ensure that all of your reports include the posts that you are concerned about

- Why - Ensure that you provide a written explanation of your concern and categorize the report appropriately

Thanks to everyone in the Hachyderm family for the laughs, love, and support through 2023. We look forward to an incredible 2024!

Your Hachyderm Moderation Team!

A Minute from the Moderators

Hello and welcome to this month’s Moderator Minutes. Apologies for missing June. We have a blog post that we’ll publish later this month as a belated post for June. The short version is we were completing that one while compiling this one, and a lot is going on for the summer. So let’s get started!

This month we will cover: welcoming new users to Mastodon, volunteering with Hachyderm, decisions around Lemmy/kbin, and what’s going on with the Meta instance.

- New to Hachyderm and Mastodon? Welcome!

- Interested in volunteering? We’re growing our moderation team!

- What did we decide about Lemmy and kbin?

- Let’s talk about Meta.

- Hachyderm inbound and outbound communications

New to Hachyderm and Mastodon? Welcome!

We’re seeing an increase in Fediverse usage as users arrive from other platforms. Welcome new users! We have an explainer for How to Hachyderm in our documentation, which includes both Hachyderm specific information as well as links to common sources you’ll want to keep handy for general Mastodon or Fediverse information. Some things you’ll want to make sure to explore:

- Understanding home, local, and federated timelines

- Understanding how DMs work and their visibility (importantly: if you add someone to a DM thread they see the history, Mastodon doesn’t create a new thread).

- There’s a strong culture of content warning and alt-text (in our Accessible Posting doc) usage in the Fediverse. We do not expect anyone to be experts on these, only to iterate and improve over time.

As always, we encourage everyone to be welcoming to new transfers from other platforms. Everyone is encouraged to help new users learn the new tools and features available to them. Please keep in mind that shaming people for not knowing something goes against the ethos of the Hachyderm server, so please only enter conversations you can participate in that will foster the growth of others.

Interested in volunteering? We’re growing our moderation team!

Also, as a response to the growth we’re seeing, we’re scaling the Moderation team! If you’re interested in volunteering on the moderation team, please read on.

Hachyderm is operated by two teams: moderation and infrastructure. In an effort to protect the instance, we have implemented a SOD (separation of duties) policy, where team members can only operate in one team at a time. For those that know that they will be interested in volunteering with different teams over time, we ask that you commit to a given team for a minimum of three months (within reason). At this point in time, we are calling for volunteers to support the moderation team.

Hachyderm Moderators are expected to be able to create and maintain safe spaces. The easiest moderation decisions we make are around “banning the Nazis”. This can include literal Nazis, of course, but also extends to all forms of extremism and their “friendly federators”. It is more difficult and nuanced to handle genuine interpersonal conflict than to simply remove harmful content and sources, local or remote. In order to handle interpersonal conflict on the instance, understanding if there’s a path back into the community or not is important. Handling interpersonal conflict that involves one or more remote users, who have agreed to different instance rules, requires understanding which of Hachyderm’s rules are local and which are global.

As part of our current call for moderators, we included some Q&A that showcases this in the application form. It’s important to know that there are no right or wrong answers on the volunteer form and that everything we do here on the instance is covered in moderation training. What we’re looking for from you is just for you to explain to us how you’d interpret and handle the concepts and two examples we’ve provided. If you are interested in applying, please respond to our call for moderators here:

If you have any questions about volunteering in general, the application form, and so on please email us at admin@hachyderm.io.

What did we decide about Lemmy and kbin?

Many of you have been waiting for the results about whether or not Hachyderm will be running a Lemmy or kbin instance. The short, at the top, answer is:

We’ve decided that a Lemmy / kbin instance will actually need to be a Nivenly project, rather than be a sub-project of Hachyderm. There will be an upcoming announcement from Nivenly about this.

In addition to information about how to apply to Nivenly as a Lemmy or kbin instance, their post will include other project announcements and exciting status updates that they know everyone has been waiting for. While the Nivenly post will have more details about what they’re looking for specifically, we can share a bit of our experience to help as well.

As one of the first two Nivenly projects (the other being Aurae), we can share two things to be aware of if you’re interested in scoping out a project. First, Nivenly will want to ensure that any projects brought on can commit to safe spaces, and there will be expectations for what that means. Second, Nivenly is looking for projects that are interested in improving the community space overall. This can mean that an infra team will need to have one or more members (but not all) who are interested in contributing to the project’s software where and as applicable.

For anyone with preemptive questions about this, please reach out to Nivenly using their email: info@nivenly.org.

Let’s talk about Meta.

Lastly, let’s talk about Meta and Threads. We’ve received a lot of questions and discourse about this in general over a few different comms channels, including Hachyderm itself, email, and Nivenly’s Community Discord. By the nature of these different communication pathways, this means that essentially small bubbles of conversation have appeared that don’t have a lot of visibility. We’re consolidating some of the outcomes of those discussions here, for reference. And of course, since information is ongoing that means we’ve had to make a few revisions to this blog post while we were writing it to account for newly released information.

For this section, the first thing that we’ll be covering is how we’ll come to consensus about Meta’s instance, Threads. Then we’ll go into a bit about how Hachydermians can communicate their wants and needs to us, as well as a refresher on how we send out communications.

How the Hachyderm instance will reach consensus about Meta and communicate about it

How Hachyderm handles federating with other instances

Due to the size of our instance, we openly federate by default. This is not unchanging: as we research and become aware of instances that have a negative impact on the Hachyderm community or otherwise pose a risk, we can limit or fully defederate from other instances. The most common situation for this is when we research DarkFedi instances, though this is not exclusive.

In general, there are two sets of criteria that determine whether we federate with an instance. One set for moderation, the other for infrastructure. In both cases, the instance must be federating in order to prompt defederation research.

For moderation:

- The instance must not be a source of extremist content of any format

- The instance must not be a source of illegal content of any format

- The instance must not engage in trolling or brigading of other instances or users

- The instance must not stalk or otherwise harass other instances or users

- The instance must not monetize their users’ data without their informed consent or monetize the data of other users on the Fediverse without their informed consent

- The instance must not be a “friendly federator” with an instance engaging with one or more of the above

Note: in the above criteria, we specifically do not allow instances to stalk, target, and otherwise harass either instances or individuals. Users that target other users will be defederated from, and instances that target individuals will also be defederated from. We also evaluate “friendly federation”, which analyzes the federation impact of instances actively federating with instances who are engaging in these behaviors or who are joining in outright. We take steps, which can include defederation, to protect our space from “friendly federators” just as we do the source instances.

For infrastructure:

- The instance must not be a source of spam

- The instance must not be a source of excessive traffic, such as a distributed denial of service (DDoS) attack

- The instance must not be a source of any other malicious traffic or activity, including but not limited to attempts to compromise the security of the servers or the data they store.

Hachyderm teams also differentiate if an activity is limited to a specific account or accounts, rather than instance-wide. To put it another way, “is there a spam account on the instance or is the instance being overrun by spam”. In order for action to be taken against an instance in its entirety, what we surface in our research must be consistent on the instance as a whole.

As a point of clarification: although an instance must be federating for us to evaluate if we should be federating with them or not, that instance does not need to be directly engaging with Hachyderm in any way to prompt our research. The vast majority of instances that we end up researching are found proactively.

The current status of Meta / Threads

Meta formally released their Threads product this week. Currently, Threads is not federating, and cannot be defederated from until it does. Current news sources (e.g. this TechCrunch article) indicate that Meta does plan for Threads to federate and support the ActivityPub protocol, which is in use on the Fediverse, but it is not doing so at this time. Less than a day into launch, there are already reports that Twitter is threatening to sue Meta over intellectual property. (Ars Technica article here.) Basically: it launched, and there is uncertainty about the product’s future.

If and when Threads starts federating, similar to other instances on the Fediverse, Hachyderm will evaluate our federation status using the above criteria. If Threads is found to be a source of harm or risk to the Hachyderm community or Hachyderm itself, then we would defederate from them.

Several Hachydermians have indicated an interest in the above analysis and how they will know what our stance is. As a reminder, we send out announcements using one or more of the following:

- Site wide announcements

- Posts on the Hachyderm account, which are cross posted to the Nivenly Community Discord

- Blog posts

Due to high user impact of any decisions on Meta / Threads, Hachydermians should expect:

- A post from our Hachyderm account when research has begun.

- A blog post with the results of our research once it’s concluded. We will announce the blog post via a site-wide announcement and a post on the Hachyderm Hachyderm account.

Once Threads starts federating, we will do research and commit to take action, with initial announcement, within two weeks. We will follow up with a more detailed blog post within 30 days of the decision.

As a reminder, any Hachydermian can bring up questions, concerns, and requests for changes at any time. This means that regardless of the decision that is ultimately made regarding Threads, users can voice their concerns and request that decision be reversed.

Resources for finding out more about the implications of Meta / Threads

We’ve received some Q&A over a few different channels as more information started coming out over the course of June. Some of the questions asked about what would happen if the Meta instance / Threads was a source of extremism, illegal content, and so forth. The answer to all of these is the same as the above: we block and ban users and instances that engage in those activities.

There are also lots of questions about “what would happen if”. For example, what would happen if the Meta instance becomes large or starts federating? There is also a lot of speculation and concern that Meta could deploy what’s called the “embrace, extend, extinguish” strategy against ActivityPub. This basically means that Meta would adopt it, start to build it out, and then (try to) eliminate its use.

Another concern in this area is what would happen with user data. To state it clearly: Hachyderm does not and will not ever sell user data. Due to the consequences of federation, we also cannot federate with instances that sell user data.

This means that if Hachyderm does ultimately federate with Threads, if and when they start federating, it will be because they pass all the above tests and, importantly, doing so does not result in Hachydermians’ data being used or sold without their consent. If Hachyderm does not ultimately federate with Threads, then it will be because the instance violated one or more of our policies for federation.

As the situation is still evolving, there is a lot of speculation to be answered. The Mastodon project has a blog post that answers some of these, including what can (and cannot) be done with ActivityPub or the Mastodon software. Similarly, Nexus of Privacy is writing a deep dive into issues and concerns with Fediverse Privacy and Threat Modeling. The article is still a draft, but already contains great points to consider if you or your instance are not doing so already.

Hachyderm inbound and outbound communications

A few quick reminders for how to communicate with the Hachyderm maintainers, if you have questions or concerns or need to request changes.

Reminder that we use GitHub Issues for consolidated, searchable, discussion threads

We use GitHub Issues as a means of communication - including intentionally leaving some threads open as Community Discussions. For 1:1 conversations, please feel free to continue to use email and Hachyderm’s Hachyderm account. We’ve also created a Hachyderm Maintainers Discord user that we use for Q&A in Nivenly’s Community Discord. That said, for wider conversations please consider using the GitHub Issues, as other users can search for and join in on the conversation! We mention this here mostly because GitHub Issues is the only place we haven’t received Q&A about Meta yet.

How we communicate site-wide

We also wanted to provide a refresher for how we communicate anything that is user impacting, including decisions, outages, and so forth, as it’s relevant to both the Meta Q&A and our communications in general. The tools we use to send announcements are one or more of the following:

- Hachyderm site announcements

- Posts on Hachyderm’s Hachyderm account, which are also cross posted in the Hachyderm category of Nivenly’s Community Discord

- Blog posts and documentation

All site announcements are also cross posted as a post on Hachyderm’s Hachyderm account. We include blog posts when there is additional detail that needs to be included, for example the Crypto Spam incident from May 2023.

We take into account how high the user impact of a decision is before choosing which, or all, of the communication paths to use. So in a case where the user impact is high you can expect to see site announcements and Hachyderm posts, as well as a blog post if additional detail is needed; whereas in situations where there is low to no user impact we’d only make a Hachyderm post if one is needed.

For 1:1 communications, like Q&A and other discussions that are not announcements, we use:

- The Hachyderm Hachyderm account

- The Hachyderm Maintainers GitHub account

- The Hachyderm Maintainers Discord account

- Our email, which is admin@hachyderm.io

We have communications documented on our Reporting and Communications documentation page for reference.

A Minute from the Moderators

Hello Hachydermia! It’s time for, you guessed it, the monthly Moderator Minute! Recently, our founder and former admin stepped down. As sad as we are to see her go, this does provide an excellent segue into the topics that we wanted to cover in this month’s Moderator Minute!

- The big question: will Hachyderm be staying online?

- Moderation on a large instance

- Harm prevention and mitigation on a large instance

The big question: will Hachyderm be staying online?

Yes!

At Hachyderm we have been scaling our Moderation and Infrastructure teams since the Twitter Migration started to land in November 2022. Everyone on both teams is a volunteer, so we intentionally oversized our teams to accommodate high fluctuations in availability. Each team has a team lead and 4 to 10 active members at any given time, meaning Hachyderm is a ~20 person org. What this means for the moderation team specifically is the topic of today’s blog post!

Moderation on a large instance

Large instances like ours are mostly powered by humans and processes. And computers, of course. But mostly humans.

And for a large instance, there needs to be quite a few humans. In our case, most of our current mods volunteered as part of our Call for Volunteers back in December 2022.

How moderators are selected

Moderators must first and foremost be aligned with the ethos of our server. That means they must agree with our stances on no racism, no white supremacy, no homophobia, no transphobia, etc. Beyond that baseline, moderators are also chosen for:

- Their lived experiences and demographics

- Their experience with community moderation

For demographics: it is important to ensure that a wide variety of lived experiences are represented on the moderation team. These collective experiences and voices allow us to discuss, build, and enforce the policies that govern our server. Ensuring that there are multiple backgrounds, including race, gender, orientation, country of origin, language, and so on helps to ensure that multiple perspectives contribute.

For prior experience: there was also an intentional mix of experience levels on the moderation team. Ensuring that there are experienced mods also means that we have the bandwidth to onboard new, less experienced moderators. Being able to lay the groundwork for mentorship and onboarding is crucial for a self-sustaining organization.

Moderation onboarding and continuous improvement

Before moderators can begin acting in their full capacity, they must:

- Agree to the Moderator Covenant

- Be trained on our policies

- This includes the server rules, account policies, etc.

- Practice on inbound tickets

- Practice tickets have feedback from the Head Moderator and the group

The first two points go hand-in-hand. Our server rules outline the allowed and disallowed actions on our server. Our Moderator Covenant governs how we interpret and enforce those rules.

When practicing, new moderators are expected to write their analysis of the ticket including how they understand the situation, what action(s) they would or would not take in the given scenario, as well as why they are making that recommendation. When training the first group of moderators, this portion of the process was intended to last a week, but it worked out so well that we have kept the process. This means it is common for multiple moderators to see an individual ticket and asynchronously discuss prior to taking an action. This informal, consensus-style review has led to our team being able to continue to learn from each individual’s experiences and expertise.

When moderators make a mistake

We do our best, but are human and are thus prone to mistakes. Whenever something like this happens, we:

- May follow up with the impacted user or users

- Will review the policy that led to the error

When we make mistakes, we will always do our best by the user(s) directly impacted. This means that we will take ownership for our mistakes, apologize to the impacted user(s) if doing so will not cause further harm, and also review any relevant policies to ensure it doesn’t happen again.

Harm prevention and mitigation on a large instance

How we handle moderation reports is driven by the enforced stance that moderation reports are harm already done. (We mentioned this stance in our recent postmortem as well.) Essentially, this means that if someone has filed a report because harm has been done, then that harm has been done.

How we determine what to do next depends on several factors, including the scope and severity of what has been done. It also depends on the source of the harm (local vs remote).

Using research as a tool for harm prevention

To say it first: in cases of egregious harm that originates on our server, the user is suspended from our server.

Reports of abuse originating from one of our own are exceedingly rare. More commonly, the reports of egregious harm come from remote sources. The worst cases are what you’d likely expect and are easy to suspend federation with.

The Hachyderm Moderation team also does a lot of proactive research regarding the origins of abusive behavior. The goal of our research is to minimize, and perhaps one day fully prevent, these instances’ ability to interact with our instance either directly or indirectly. To achieve this, we research not only the instances that are the sources of abusive behavior and content, but also those actively federating with them. To be clear: active here means active participation. We take this research very seriously and are doing it continuously.

Nurturing safe spaces by requiring active participation in moderation

The previous section focused on how we handle the worst offenders. What about everyone else? When someone Well Actuallys or doesn’t listen, or doesn’t respond properly when a boundary has been specified? (For information about setting and maintaining boundaries, please see our Mental Health doc.)

In cases where what is being reported is not an egregious source of harm, the Hachyderm Moderation team makes heavy use of Mastodon’s freeze feature. The way we use it is to send the user a message that details what they were reported for, including their posts as needed, and use the freeze to tell them what we need from them to restore normal activity on their account. To prevent moderation issues from going on for long periods of time, users must respond in a given time frame and then perform the required action(s) in a given time frame.

What actions we require are situationally dependent. Most commonly, we request that users delete their own posts. We do this because we want to nurture a community where individuals are aware of, and accountable for, their actions. When moderators simply delete posts, the person who made the post is not required to even give the situation a second thought.

Occasionally, we may nudge the reported user a little further. In these cases, we include some introductory information in our message and also request that the person do a brief search on the topic as a condition of reinstating the account. The request usually looks a bit like this:

We ask that you do a light search on these topics. Only 5-15 min is fine.

The goal of this request is that we can all help make our community a safer place just by taking small steps to increase our awareness of others in our shared space.

If you agree to the above by filing an appeal, we will unfreeze your account. If you have further questions, please reach out to us at admin@hachyderm.io .

– The Hachyderm Mods

The reason we ask for such a brief search is because we do not expect someone to become an expert overnight. We do expect that all of us take small steps together to learn and grow.

As a point of clarification: we only engage in the freeze and restore pattern if the reported situation does not warrant a more immediate and severe action, such as suspending the user from Hachyderm.

And that’s it for this month’s Moderator Minute! Please feel free to ask the moderation team any questions about the above, either using Hachyderm’s Hachyderm account, email, or our Community Issues. We’ll see you next month! ❤️

Stepping Down From Hachyderm

Recently I abruptly removed myself from the “Admin” position of Hachyderm. This has surfaced a number of threads about me, Hachyderm, and the broader Fediverse. Today I would like to offer an apology as well as provide some clarity. There are a lot of rumors going around and I want to address some of them.

Before I get into why I am stepping down as admin, I want to be crystal clear: Hachyderm allows mutual aid. Hachyderm has always allowed mutual aid.

There has never been a point in Hachyderm’s history when mutual aid was not allowed. What we have never allowed on Hachyderm, is spam. Which we see a lot of, including phishing. What we have changed positions on, is corporate and organizational fundraising.

For us to say “Hachyderm does allow mutual aid,” and “Hachyderm does not allow spam or organizational fundraising,” requires the mods to define “Mutual Aid,” “Spam,” and “Organizational fundraising.” Once defined, Hachyderm needs to come up with policies for these concepts, and instruct moderators to manage them. We do not always get this right, and we rely on the community’s help to tell us when we get it wrong, and how we can be more precise in our language and our actions.

One of the things I’ve found difficult about engaging in moderation discussions on the Fediverse, is the inability to agree on the facts at hand. I’ve always been very willing to take accountability for my actions, and take feedback on how to improve and make things better for all of our users. But that’s not possible to do when we can’t even agree on the topic at hand, and when we don’t engage with each other. In this most recent incident, I’ve had to spend significantly more time re-stating that Hachyderm does support mutual aid, and less time focusing on improving our policies and communications, so that our anti-spam and anti-organizational fundraising policies don’t harm members of our community that we want to support.

I would be very happy to have conversations about how Hachyderm’s policy, language, and enforcement were wrong, caused harm, and need to be fixed. That is a conversation worth having. I was also happy to have conversations around our stance on organizational fundraising. But the conversations about how I personally “contribute to trans genocide because I don’t support mutual aid,” are untrue, hurtful, and in my opinion, unproductive. Yes, this is extra hurtful to me, as I have experienced homelessness, and my own dependency on mutual aid as a transgender person. This is dear to my heart.

In many cases, decisions by other admins and mods were made on the assumption that I don’t support mutual aid, and this has been hard for me to reconcile.

I want to address something else, as my departure has some folks in our Hachyderm community and the broader Fediverse concerned about who will lead moderation after I leave. Moderation at Hachyderm will continue to be led by our lead moderator, as it has always been. I have never been the moderator of Hachyderm. In fact, on several occasions, I was moderated by the moderation team, including being asked to remove my post on Capitalism. This is as it should be, and I accepted the decisions of the moderation team on each occasion. In a healthy community, no one is above the rules.

Regarding the post on BlueSky, my hope was to push BlueSky towards an open identity provider such that the rest of the Fediverse could leverage the work. This would address the problem with people not owning their own identity/authentication, which is something that is important to many Hachydermians. People are already asking about this, and the AT protocol in general.

Effectively my intention was to utilize BlueSky’s hype and resources on behalf of federated identity such that people can own their own identity. Mastodon also has open issues about this. At this point I am exhausted and am abandoning BlueSky as well. I am taking a break from all social media at this time to protect my mental health.

The Hachyderm service has grown unexpectedly and I have tried my best to build a strong organization to live on without me. I have always intended on stepping down such that the collective could continue without me. Part of relinquishing control involved slowly stepping back one position at a time. It is up to the collective now to manage the service moving forward, and I deeply believe there are wonderful people in place to manage the service.

A Minute from the Moderators

Hello and welcome to April! This month we’ll be reviewing the account verification process we rolled out as well as two more classic moderation topics: how to file a report and what to do if you’re moderated.

- Account Verification

- How to file outstanding moderation reports

- Meter yourself when filing reports

- When you’ve been moderated

Account Verification

Throughout the month of March we launched and started circulating an account verification process. What does this mean, how do we use it, and what does it tell Hachydermians?

Mastodon account verification is like an identity service

Verification in the Mastodon context is similar to an ID verification service.

When you build your profile you have four fields that are labeled “profile metadata”. When you include a URL that you have a rel=me link to your Mastodon profile on, then that URL highlights green with a corresponding green checkmark. In that case, the URL is verified: confirming that the person who has control of the account also has control of the domain.
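
To make the rel=me idea more concrete, here is a minimal sketch of the kind of check involved: given the HTML of a page listed in your profile metadata, look for a link marked rel="me" that points back at your Mastodon profile. This is only an illustration and not Mastodon's actual implementation. The names `RelMeLinkCollector` and `links_back_to_profile`, as well as the example profile URL, are hypothetical, and the URL comparison is deliberately simplified.

```python
from html.parser import HTMLParser


class RelMeLinkCollector(HTMLParser):
    """Collect href values from <a> and <link> tags whose rel contains "me"."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag not in ("a", "link"):
            return
        attributes = dict(attrs)
        # rel can hold several space-separated tokens, e.g. rel="me noopener"
        rel_tokens = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "me" in rel_tokens and href:
            self.hrefs.append(href)


def links_back_to_profile(page_html, profile_url):
    """Return True if the page has a rel="me" link pointing at profile_url.

    Simplified assumption: URLs match exactly apart from a trailing slash.
    """
    collector = RelMeLinkCollector()
    collector.feed(page_html)
    wanted = profile_url.rstrip("/")
    return any(href.rstrip("/") == wanted for href in collector.hrefs)


# Hypothetical page content and profile URL, for illustration only.
page = '<a rel="me" href="https://hachyderm.io/@example">Find me on Mastodon</a>'
print(links_back_to_profile(page, "https://hachyderm.io/@example"))
```

A page that links to the profile without rel="me" would not count, which is the point of the mechanism: only someone who controls the page can add that attribute.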

Hachyderm verification makes verification visible on an account profile

Since some specialized accounts are restricted on Hachyderm, we decided to make it more immediately visible which accounts are approved or not. As part of these discussions, we also extended the verification process to even non-restricted specialized accounts.

In order to verify, specialized accounts use the process outlined on our Account Verification page which includes agreeing to the Specialized Account Expectations and using our Community GitHub issues to submit the request. Once approved, we add their Hachyderm account to an approval page we created for this process. For an example of what the end result looks like, take a look at one of our first corporate accounts, Tailscale:

Specialized accounts should be verified

As a reminder, the only accounts we’re currently requiring to be verified are:

- Corporate accounts

- Bot accounts

- Curated accounts

That said, the account verification process is open to all specialized accounts. This includes but is not limited to: non-profits, conferences, meetups, working groups, and other “entity” based accounts.

Account verification is not open to individual users at this time. That said, if you are an independent contractor or similar type of individual / self-run business please read on.

We support small orgs, startups, self-run businesses, non-profits, etc.

Please email us at admin@hachyderm.io if this applies to your account or an account you would like to create. This is the grey area for all accounts that due to size, model, or “newness” don’t fit cleanly into the account categories we’ve tried to create.

In particular, if you suspect you might fit our criteria for a corporate account but the pricing model would be a burden for you: please still reach out! We’re happy to help and try to figure something out.

How to file outstanding moderation reports

First of all: thank you to everyone for putting your trust in us and for sending reports our way. Reports on any given day or week can vary and include mixtures of spam, on and off server bad behavior, and so on. When you send reports our way, here are the main things to keep in mind so that your reports are effective.

Please see our Reporting and Communication doc, which details Hachyderm specific information, and our Report Feature doc, which shows what we see when we receive a report, for reference.

Always include a description with your own words

You should always include a description with your report. It can be as succinct as “spam” or more descriptive like “account is repeatedly following / unfollowing other users”. You should include a description even if the posts, when included, seem to speak for themselves. If you are reporting content in a language other than English, please supply translations for any dog whistles or other commentary that a translation site will likely miss in a word-for-word translation.

Mastodon also deletes posts from reports more than 30 days old. So in the event that we need to check on a user and/or domain that has been reported more than once, but infrequently, the added context can also help us capture information that is no longer present.

(Almost) Always include relevant posts

If you are reporting a user because of something they have posted, you should (almost) always include the posts themselves. When a post is reported, the post is saved in the report even if the user’s home instance deletes the posts. If the posts are not included, and the user and/or their instance mods delete the posts, then we have an empty report with no additional context.

Please feel free to use your best judgement when choosing to attach posts to a report or not. In the rare situation where you are reporting extreme content, especially with imagery, you can submit a report without posts but please ensure that you have included the context for what we can expect when we investigate the user and/or domain.

Be clear when you are forwarding a report (or not)

When you file reports for users that are off-server, you will have the option to forward the report to the user’s server admins. When a report is not forwarded, only the Hachyderm moderation team sees it. Reports forward to remote instance admins by default. If you are choosing not to forward a report for a remote user, please call it out in your comments. Although we can see when a report isn’t forwarded, the added visibility helps.

There will be times when a reported user’s infraction falls under the purview of their instance moderators and whatever server rules that user has agreed to and may be in violation of. Typically, we will only step in to moderate these situations when we need to de-federate with a remote user and/or instance completely.

Meter yourself when filing reports

We appreciate everyone who takes the time to send us a report so we can work towards keeping the Hachyderm community safe. Make sure when you are doing so that you are being mindful of your own mental health as well. As a moderation team, we are able to load balance the reports that come through to protect us individually from burnout or from seeing content that can strongly, negatively, impact us on a personal level.

Even in situations where there is yet another damaging news cycle, which in turn creates a lot of downstream effects, individuals should avoid taking on what it takes a team to tackle. In these situations, please balance the reports you send with taking steps to separate yourself from continued exposure to that content. For tips and suggestions about how to do this, please see our March Moderator Minute and our Mental Health doc.

When you’ve been moderated

Being moderated is stressful! We understand and do our best to intervene only when required to maintain community safety or when accounts need to be nudged to be in alignment with rules for their account type and/or server rules.

For additional information on the below, please see both our Reporting and Communication doc and our Moderation Actions and Appeals doc.

Take warnings to heart, but they do not require an appeal

Warnings are only used as a way to communicate with you using the admin tools. They are not accrued like a “strike” system, where something happens if you exceed a certain number. Since we only send warnings when an account needs a nudge, either a small rule clarification or similar, they do not need to be appealed. Appeals to warnings will typically receive either no action or a rejection for this reason.

Always include your email when appealing an account restriction

If your account has been restricted in some way, e.g. either frozen or suspended, then you will need to file an appeal to open a dialogue for us to reverse that decision. You should always include how we can email you in your appeal: the admin UI does not let us respond to appeals. We can only accept (repeal) or reject (keep) the decision.

Let us know if we’ve made a mistake

If we’ve made an error in moderating your account: apologies! We do our best, but mistakes can and will happen. If your account has been restricted, please file an appeal the same as in the above: by including the error and your email so we can follow up with you as needed. Once we have the information we need we can reverse the error.

A Minute from the Moderators

We’ve been working hard to build out more of the Community Documentation to help everyone to create a wonderful experience on Hachyderm. For the past month, we’ve focused most heavily on our new How to Hachyderm section. The docs in this section are:

When you are looking at these sections, please be aware that the docs under the How to Hachyderm section are for the socialized norms around each topic and the subset of those norms that we moderate. Documentation around how to implement the features is both under our Mastodon docs section and on the main Mastodon docs. This is particularly relevant to our Content Warning sections: How To Hachyderm Content Warnings is about how content warnings are used here and on the Fediverse, whereas Mastodon User Interface Content Warnings is about where in the post composition UI you click to create a content warning.

Preserving your mental health

In our new Mental Health doc, we focus on ways that you can use the Mastodon tools for constraining content and other information. We structured the doc to answer two specific questions:

- How can people be empowered to set and maintain their own boundaries in a public space (the Fediverse)?

- What are the ways that people can toggle the default “opt-in”?

By default, social media like Mastodon / the Fediverse opts users in to all federating content. This includes posts, likes, and boosts. Depending on your needs, you may want to opt out of some subsets of that content either on a case-by-case basis, by topic, by source, or by type. Remember:

You can opt out of any content for any reason.

For example, you may want to opt out of displaying media by default because it is a frequent trigger. Perhaps the specific content warnings you need aren’t well socialized. Maybe you are sensitive to animated or moving media. That said, perhaps media isn’t a trigger - you just don’t like it. Regardless of your reason, you can change this setting (outlined in the doc) whenever you wish and as often as meets your needs.

Hashtags and Content Warnings

Our Hashtags and Content Warnings docs are to help Hachydermians better understand both what these features are and the social expectations around them. In both cases, there are some aspects of the feature that people have encountered before: hashtags in particular are very common in social media and content warnings mirror other features that obscure underlying text on sites like Reddit (depending on the subreddit) and tools like Discord.

Both of these features have nuance to how they’re used on the Fediverse that might be new for some. On the Fediverse, and on Hachyderm, there are “reserved hashtags”. These are hashtags that are intended only for a specific, narrow, use. The ones we moderate on Hachyderm are FediBlock, FediHire, and HachyBots. For more about this, please see the doc.

Content warnings are possibly less new in concept. The content warning doc focuses heavily on how to write an effective content warning. Effective content warnings are important as you are creating a situation for someone else to opt in to your content. This requires consent, specifically informed consent. A well written content warning should inform people of the difference between “spoilers”, “Doctor Who spoilers”, and “Doctor Who New Year’s Special Spoilers”. The art of crafting an effective content warning is balancing what information to include while also not making the content warning so transparent that the content warning is the post.

Notably, effective content warnings feature heavily in our Accessible Posting doc.

Accessible Posting

Our Accessible Posting doc is an introductory guide to different ways to improve inclusion. It is important to recognize there are two main constraints for this guide:

- It is an introductory guide

- The Mastodon tools

As an introductory guide, it does not cover all topics of accessibility. As a guide that focuses on Mastodon, the guide discusses the current Mastodon tools and how to fully utilize them.

As an introductory guide, our Accessibility doc primarily seeks to help users develop more situational awareness for why there are certain socialized patterns for hashtags, content warnings, and posting media. We, as moderators of Hachyderm, do not expect anyone to be an expert on any issue that the doc covers. Rather, we want to help inspire you to continue to learn about others unlike yourself and see ways that you can be an active participant in creating and maintaining a healthy, accessible, space on the Fediverse.

Content warnings feature heavily in this doc. This is because Mastodon is a very visual platform, so the main way to connect with others who do not have the same experience of visual content is by supplying relevant information.

There will always be more to learn and more, and better, ways to build software. For those interested in improving the accessibility features of Mastodon, we recommend reviewing Mastodon’s CONTRIBUTING document.

More to come

We are always adding more docs! Please check the docs pages frequently for information that may be useful to you. If you have an idea for the docs, or wish to submit a PR for the docs, please do so on our Community repo on GitHub.

April will mark one month since we launched the Nivenly Foundation, Hachyderm’s parent org. Nivenly’s website is continuing to be updated with information about how to sponsor or become a member. For more information about Nivenly, please see Nivenly’s Hello World blog post.

The creation of Nivenly also allowed us to start taking donations for Hachyderm and sell swag. If you are interested in donating, please use either our GitHub Sponsors or one of the other methods that we outline on our Thank You doc. For Hachyderm swag, please check out Nivenly’s swag store.

Decaf Ko-Fi: Launching GitHub Sponsors et al

Since our massive growth at the end of last year, many of you have asked about ways to donate beyond Nóva’s Ko-Fi. There were a few limitations there, notably the need to create an account in order to donate. There were also a few milestones we needed to hit before we could do this properly: notably, we needed an EIN in order to receive donations and pay for services as an entity.

Well, that time has come! Read on to learn about how you can support Hachyderm either directly or via Hachyderm’s parent organization, the Nivenly Foundation.

First things first: GitHub Sponsors

Actual Octocat from our approval email

As of today the Hachyderm GitHub Sponsors page is up and accepting donations! Using GitHub Sponsors you can add a custom amount and donate either once or monthly. There are a couple of donation tiers that you can choose from as well if you are interested in shoutouts / thank yous either on Hachyderm or on our Funding and Thank You page. In both cases we’d use your GitHub handle for the shoutout.

The shoutouts and Thank You page

#ThankYouThursday is a hashtag we’re creating today to thank users for their contributions. Most posts for #ThankYouThursday happen on Hachyderm’s Hachyderm account, but higher donations will be eligible for shoutouts on Kris Nóva’s Hachyderm account.

- $7/mo. and higher

  - Get a sponsor badge on your GitHub profile

- $25/mo. and higher or $100 one-time and higher

  - Get a sponsor badge on your GitHub profile

  - Get a shoutout on the Hachyderm account’s quarterly #ThankYouThursday

- $50/mo. and higher or $300 one-time and higher

  - Get a sponsor badge on your GitHub profile

  - Get a shoutout on Kris Nóva’s account’s quarterly #ThankYouThursday

- $1000 one-time and higher

  - Get a sponsor badge on your GitHub profile

  - Get a shoutout on the Hachyderm account’s quarterly #ThankYouThursday

  - Be added to the Thank You List on our Funding page

- $2500 one-time and higher

  - Get a sponsor badge on your GitHub profile

  - Get a shoutout on Kris Nóva’s quarterly #ThankYouThursday

(All above pricing in USD.)

A couple of important things about the above:

- All public announcements are optional. You can choose to opt-out by having your donation set to private.

- By default we’ll use your GitHub handle for shoutouts. This is easier than reconciling GitHub and Hachyderm handles.

- We may adjust the tiers to make the Thank Yous more frequent.

Right now the above tiers are our best guess, but we may edit the #ThankYouThursday thresholds in particular so that we can keep a sustainable cadence. Thank you for your patience and understanding with this ❤️

And now an update for the Nivenly Foundation

For those who don’t know: the Nivenly Foundation is the non-profit co-op that we’re founding for Hachyderm and other open source projects like Aurae. The big milestone we reached here is that 1) we’re an official non-profit with the State of Washington and 2) we have a nice, shiny EIN, which allowed us to start accepting donations to both the Nivenly Foundation and its two projects: Aurae and Hachyderm. For visibility, here are all the GitHub sponsor links in one place:

It is also possible to give a custom one-time donation to Nivenly via Stripe:

Right now only donations are open for Nivenly, Aurae, and Hachyderm. After we finalize Nivenly’s launch, Nivenly memberships will also be available for individuals, maintainers, and what we call trade memberships for companies, businesses, and business-like entities.

What do Nivenly Memberships mean for donations?

Right now, donations and memberships are separate. That means that you can donate to Hachyderm and, once available, join Nivenly as two separate steps. As Nivenly’s largest project, providing governance and funding for Hachyderm uses almost all of Nivenly’s donations. As we grow and include more projects this is likely to shift over time. As such, we are spinning up an Open Collective page for Nivenly that will manage the memberships and also provide a way for us to be transparent about our budget as we grow. Our next two big milestones:

- What you’ve all been waiting for: the public release of the governance model (almost complete)

- What we definitely need: the finalization of our 501(c)(3) paperwork with the IRS (in progress)

As we grow we’ll continue to post updates. Thank you all so much for your patience and participation 💕

P.S. and update: What’s happening with Ko-fi?

We are currently moving away from Kris Nóva’s Ko-fi as a funding source for Nivenly and Hachyderm et al. We’ve created a new Ko-fi account for the Nivenly Foundation itself:

Kris Nóva’s Ko-fi is still live to give people time to migrate Nivenly-specific donations (including those for Hachyderm and Aurae) from her Ko-fi to GitHub Sponsors, Nivenly’s Ko-fi, Stripe, or a Nivenly co-op general membership via Nivenly’s Open Collective page as those become ready (which should be soon). We’ll still be using Nivenly-specific funds from her Ko-fi for Nivenly for the next 30-60 days and will follow up with an update as we wind down that (manual 😅) process.

Growth and Sustainability

Thank you to everyone who has been patient with Hachyderm as we have had to make some adjustments to how we do things. Finding ourselves launched into scale has impacted our people more than it has impacted our systems.

I wanted to provide some visibility into our intentions with Hachyderm, our priorities, and immediate initiatives.

Transparency Reports

We intend on offering transparency reports similar to the November Transparency Report from SFBA Social. It will take us several weeks before we will be able to publish our first one.

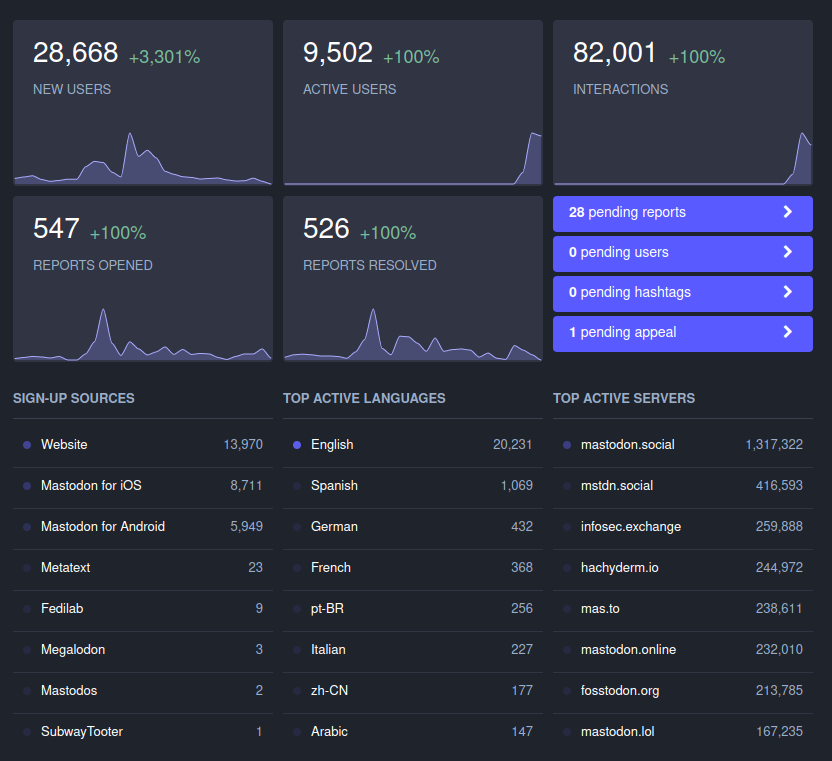

The immediate numbers from the administration dashboard are below.

Donations

On January 1st, 2023 we will be changing our financial model.

Hachyderm has been operating successfully since April of 2022 by funding our infrastructure from the proceeds of Kris Nóva’s Twitch presence.

In January 2023 we will be rolling out a new financial model intended to be sustainable and transparent for our users. We will be looking into donation and subscription models such as Patreon at that time.

From now until the end of the year, Hachyderm will continue to operate using the proceeds of Kris Nóva’s Twitch streams and donations made through the Ko-fi donation page.

Governing Body

We are considering forming a legal entity to control Hachyderm in January 2023.

At this time we are not considering a for-profit corporation for Hachyderm.

The exact details of our decision will be announced as we seek legal advice and reach a conclusion.

User Registration

At this time we do not have any plans to “cap” or limit user registration for Hachyderm.

There is a small chance we might temporarily close registration for short, limited periods of time during events such as the DDoS Security Threat.

To be clear, we do not plan on rolling out a formal registration closure for any substantial or planned period of time. Any closure will be as short as possible, and registration will be reopened as soon as it is safe to do so.

We will be reevaluating this decision continuously. If at any point Hachyderm becomes bloated or unreasonably large we will likely change our decision.

User Registration and Performance

At this time we do not believe that user registration will have an immediate or noticeable impact on the performance of our systems. We do not believe that closing registration will somehow “make Hachyderm faster” or “make the service more reliable”.

We will be reevaluating this decision continuously. If at any point the growth patterns of Hachyderm change, we will likely change our decision.

Call for Volunteers

We will be onboarding new moderators and operators in January to help with our service. To help with that, we have created a short Typeform to consolidate all the volunteer offers so it is easier for us to reach back out to you when we’re ready:

The existing teams will be spending the rest of December cleaning up documentation and building out this community resource in a way that makes it easy for newcomers to be self-sufficient with our services.

As moderators and infrastructure teams reach a point of sustainability, each will announce the path forward for volunteers when they feel the time is right.

The announcements page on this website will be the source of truth.

Our Promise to Our Users

Hachyderm has signed The Mastodon Server Covenant which means we have given our commitment to give users at least 3 months of advance warning in case of shutting down.

My personal promise is that I will do everything in my power to support our users any way I can that does not jeopardize the safety of other users or myself.

We will be forming a broader set of governance and expectation setting for our users as we mature our services and documentation.

Sustainability

I wanted to share a few thoughts on sustainability with Hachyderm.

Part of creating a sustainable service for our users will involve participation from everyone. We are asking that all Hachydermians remind themselves that time, patience, and empathy are some of the most effective ways to create sustainable services.

There will be some situations where we will have to make difficult decisions with regard to priority. Oftentimes the reason we aren’t immediately responding to an issue isn’t that we are ignoring the issue or are oblivious to it. It is that we have to spend our time and effort wisely in order to keep a sustainable posture for the service. We ask for patience, as it will sometimes take days or weeks to respond to issues, especially during production infrastructure issues.

We ask that everyone remind themselves that pressuring our teams is likely counterproductive to creating a sustainable environment.

Leaving the Basement

This post has taken several weeks to compile. My hope is that it captures the vast majority of questions people have been asking recently with regard to Hachyderm.